Transform bounding box to instance mask annotations using Segment Anything Model

This tutorial covers how to convert bounding box annotations to instance mask annotations using the Segment Anything Model (SAM). This feature requires a dedicated model inference server instance. We describe how to build the Docker image of the model inference server for SAM in this guide. Please build the Docker image before you follow this tutorial. We will use the vit_b SAM model deployed on an OpenVINO™ Model Server instance, so you need to build the segment-anything-ovms:vit_b Docker image in preparation. The other SAM model types (vit_l and vit_h) are available as well.

Prerequisite

Download Six-sided Dice dataset

This is a download link for the Six-sided Dice dataset on Kaggle. Please download the dataset using this link and extract it to your workspace directory. You will then have a d6-dice directory with annotations and images in YOLO format as follows.

d6-dice
├── Annotations
│   ├── classes.txt
│   ├── IMG_20191208_111228.txt
│   ├── IMG_20191208_111246.txt
│   ├── ...
└── Images
    ├── IMG_20191208_111228.jpg
    ├── IMG_20191208_111246.jpg
    ├── ...

However, for import compatibility, an obj.names file must be added at the d6-dice/obj.names filepath. This obj.names file lists the label names of the dataset, e.g., [dice1, ..., dice6], so you can write it with the following simple code. Please see Yolo Loose format for more details.

[ ]:

# Copyright (C) 2023 Intel Corporation

#

# SPDX-License-Identifier: MIT

import os

root_dir = "d6-dice"

names = """

dice1

dice2

dice3

dice4

dice5

dice6

"""

fpath = os.path.join(root_dir, "obj.names")

with open(fpath, "w") as fp:
    fp.write(names)
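For reference, each line of a YOLO annotation file such as IMG_20191208_111228.txt stores one box as `class_id x_center y_center width height`, with the four coordinates normalized to the image size. The sketch below converts one such line to a pixel-space box. The function name `yolo_line_to_pixel_box` and the example values are hypothetical and not part of Datumaro, and label index 0 is assumed to map to the first name in obj.names.

```python
def yolo_line_to_pixel_box(line, img_width, img_height):
    """Convert one YOLO annotation line to (label_index, x, y, w, h) in pixels.

    x and y are the top-left corner of the box.
    """
    class_id, xc, yc, w, h = line.split()
    w_px = float(w) * img_width
    h_px = float(h) * img_height
    # YOLO stores the box center, so shift by half the size to get the corner.
    x_px = float(xc) * img_width - w_px / 2
    y_px = float(yc) * img_height - h_px / 2
    return int(class_id), x_px, y_px, w_px, h_px


# Made-up example line for an 800 x 600 image (not taken from the dataset).
print(yolo_line_to_pixel_box("0 0.5 0.5 0.25 0.5", img_width=800, img_height=600))
# prints (0, 300.0, 150.0, 200.0, 300.0)
```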

Launch the Model Inference Server for SAM

In this tutorial, we will launch an instance of the OpenVINO™ Model Server to serve the SAM encoder and decoder models on your local machine. Alternatively, you can opt for the NVIDIA Triton™ Inference Server as well. For additional information, please refer to this guide.

To launch the OpenVINO™ Model Server instance and expose the gRPC endpoint on localhost:8001, use the following docker run command:

[ ]:

!docker run -d --rm --name ovms_sam -p 8001:8001 segment-anything-ovms:vit_b --port 8001
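Before moving on, it can help to confirm that something is listening on the exposed port. The following is a minimal sketch that uses only the Python standard library. The helper name `is_port_open` is hypothetical, and a successful TCP connection only shows that the port accepts connections, not that the SAM models have finished loading.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


# localhost:8001 is the gRPC endpoint exposed by the docker run command above.
print("Server port reachable:", is_port_open("localhost", 8001))
```

If this prints False right after starting the container, waiting a few seconds and retrying is a reasonable first step.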

Transform bounding box to instance mask annotations

We first import the d6-dice dataset using Datumaro. Because it is an object detection dataset, the original dataset has only bounding box annotations. We can convert the bounding box annotations to instance mask annotations using the SAM model. Datumaro provides this annotation transformation feature as follows.

[ ]:

import datumaro as dm

dataset = dm.Dataset.import_from("d6-dice", format="yolo")

dataset

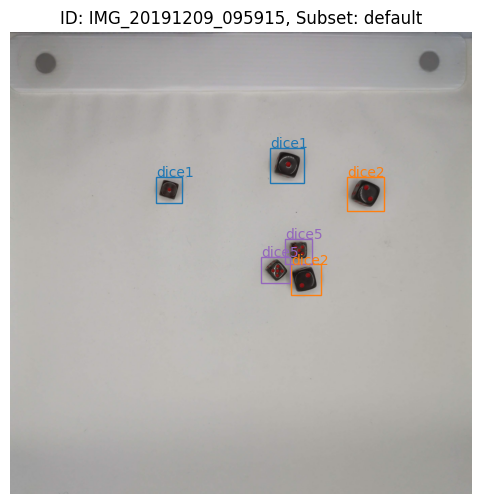

As expected, the dataset contains only bounding box annotations, as the following visualization shows.

[2]:

item_id = "IMG_20191209_095915"

subset = "default"

viz = dm.Visualizer(dataset, figsize=(8, 6))

fig = viz.vis_one_sample(item_id, subset)

fig.show()

Now, we apply SAMBboxToInstanceMask to the dataset. This transform requires several arguments to execute properly.

inference_server_type represents the type of inference server on which SAM encoder and decoder are deployed. In this example, we launched the OpenVINO™ Model Server instance. Therefore, please select InferenceServerType.ovms.

The gRPC endpoint address was localhost:8001. To configure this, provide the following parameters:

host="localhost"

port=8001

protocol_type=ProtocolType.grpc

You can also specify a timeout=60.0 value, which represents the maximum number of seconds to wait for a response from the server instance.

Additionally, you can choose to produce Polygon output for the instance mask. However, in this case, we have set to_polygon to False, resulting in an output of the Mask annotation type.

Lastly, we’ve set num_workers=0. This means we will use synchronous iteration to send a model inference request to the server instance and wait for the inference results. If you need to handle multiple inference requests concurrently, you can increase this value to utilize a thread pool. This is particularly useful when dealing with server instances that have high throughput.

[3]:

from datumaro.plugins.sam_transforms import SAMBboxToInstanceMask

from datumaro.plugins.inference_server_plugin import InferenceServerType, ProtocolType

with dm.eager_mode():
    dataset.transform(
        SAMBboxToInstanceMask,
        inference_server_type=InferenceServerType.ovms,
        host="localhost",
        port=8001,
        timeout=60.0,
        protocol_type=ProtocolType.grpc,
        to_polygon=False,
        num_workers=0,
    )

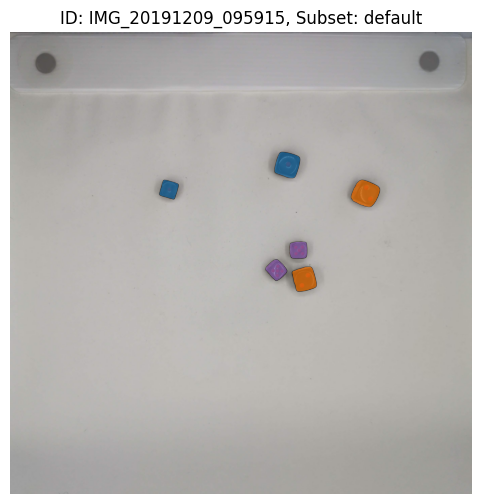
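To illustrate the difference between num_workers=0 and a larger value, here is a minimal sketch of synchronous iteration versus a thread pool. It is not Datumaro's internal implementation: `fake_inference_request` and `run_requests` are hypothetical names, and the request function only stands in for a blocking call to the server instance.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_inference_request(item_id):
    """Hypothetical stand-in for one blocking request to the inference server."""
    return f"mask-for-{item_id}"


def run_requests(item_ids, num_workers=0):
    """Process items one by one when num_workers is 0, else through a thread pool."""
    if num_workers == 0:
        # Synchronous iteration: send a request, wait for the result, repeat.
        return [fake_inference_request(i) for i in item_ids]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Several requests are in flight at once; map keeps the input order.
        return list(pool.map(fake_inference_request, item_ids))


items = ["img_a", "img_b", "img_c"]
print(run_requests(items, num_workers=0))
print(run_requests(items, num_workers=2))
```

Both calls return the same results in the same order. The thread pool only pays off when the server can actually process several requests concurrently.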

Let’s visualize the transformed dataset again. In the following figure, you can see that the bounding box annotations have been converted to instance masks.

[4]:

viz = dm.Visualizer(dataset, figsize=(8, 6), alpha=0.7)

fig = viz.vis_one_sample(item_id, subset)

fig.show()

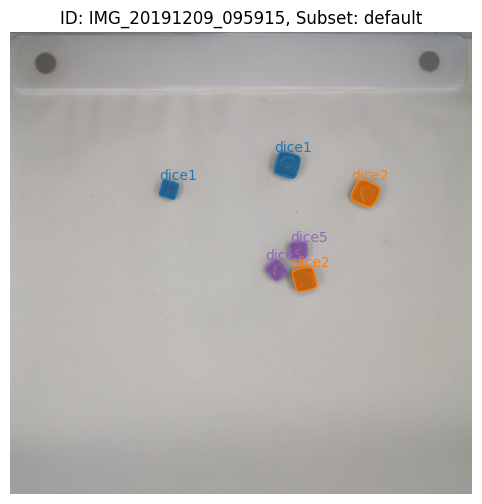

If you want to convert the Mask annotations to Polygon annotations after running SAMBboxToInstanceMask with to_polygon=False, you can transform your dataset again using the MasksToPolygons transform provided by Datumaro.

[5]:

from datumaro.plugins.transforms import MasksToPolygons

print("Before MaskToPolygons transform")

for ann in dataset.get(item_id, subset).annotations[:2]:
    print(ann)

with dm.eager_mode():
    dataset.transform(MasksToPolygons)

print("After MaskToPolygons transform")
for ann in dataset.get(item_id, subset).annotations[:2]:
    print(ann)

Before MaskToPolygons transform

Mask(id=0, attributes={}, group=0, object_id=-1, _image=array([[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

...,

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False]]), label=0, z_order=0)

Mask(id=1, attributes={}, group=1, object_id=-1, _image=array([[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

...,

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False]]), label=0, z_order=0)

After MaskToPolygons transform

Polygon(id=0, attributes={}, group=0, object_id=-1, points=[912.0, 876.0, 909.0, 878.0, 907.0, 880.0, 906.0, 882.0, 904.0, 887.0, 902.0, 892.0, 901.0, 895.0, 899.0, 902.0, 898.0, 907.0, 898.0, 912.0, 897.0, 916.0, 896.0, 919.0, 895.0, 922.0, 893.0, 925.0, 892.0, 928.0, 891.0, 931.0, 890.0, 935.0, 889.0, 943.0, 885.0, 953.0, 885.0, 961.0, 886.0, 963.0, 887.0, 965.0, 888.0, 966.0, 890.0, 967.0, 892.0, 968.0, 894.0, 968.0, 901.0, 972.0, 904.0, 973.0, 909.0, 973.0, 913.0, 974.0, 920.0, 976.0, 934.0, 981.0, 963.0, 989.0, 970.0, 989.0, 972.0, 987.0, 973.0, 987.0, 974.0, 986.0, 975.0, 984.0, 977.0, 980.0, 978.0, 977.0, 979.0, 974.0, 979.0, 971.0, 980.0, 968.0, 981.0, 965.0, 986.0, 955.0, 988.0, 948.0, 990.0, 941.0, 992.0, 934.0, 992.0, 930.0, 993.0, 926.0, 996.0, 919.0, 997.0, 915.0, 997.0, 903.0, 996.0, 901.0, 993.0, 898.0, 990.0, 896.0, 987.0, 895.0, 982.0, 894.0, 977.0, 891.0, 974.0, 890.0, 967.0, 888.0, 956.0, 885.0, 948.0, 884.0, 935.0, 879.0, 931.0, 878.0, 926.0, 877.0, 919.0, 876.0, 912.0, 876.0], label=0, z_order=0)

Polygon(id=1, attributes={}, group=1, object_id=-1, points=[1606.0, 711.0, 1603.0, 712.0, 1601.0, 713.0, 1599.0, 714.0, 1595.0, 718.0, 1593.0, 721.0, 1592.0, 726.0, 1591.0, 728.0, 1590.0, 730.0, 1588.0, 732.0, 1587.0, 734.0, 1585.0, 739.0, 1581.0, 750.0, 1580.0, 753.0, 1578.0, 764.0, 1575.0, 770.0, 1574.0, 774.0, 1573.0, 778.0, 1573.0, 782.0, 1572.0, 786.0, 1569.0, 793.0, 1568.0, 798.0, 1567.0, 805.0, 1567.0, 826.0, 1568.0, 829.0, 1574.0, 835.0, 1577.0, 836.0, 1586.0, 840.0, 1605.0, 847.0, 1608.0, 848.0, 1618.0, 850.0, 1625.0, 853.0, 1634.0, 858.0, 1639.0, 860.0, 1642.0, 861.0, 1646.0, 862.0, 1650.0, 863.0, 1659.0, 864.0, 1671.0, 865.0, 1677.0, 864.0, 1681.0, 863.0, 1683.0, 862.0, 1688.0, 857.0, 1690.0, 854.0, 1693.0, 848.0, 1695.0, 843.0, 1695.0, 840.0, 1696.0, 837.0, 1699.0, 832.0, 1701.0, 827.0, 1702.0, 824.0, 1703.0, 817.0, 1706.0, 812.0, 1707.0, 809.0, 1709.0, 802.0, 1713.0, 786.0, 1713.0, 779.0, 1714.0, 773.0, 1716.0, 768.0, 1717.0, 761.0, 1717.0, 750.0, 1716.0, 747.0, 1713.0, 741.0, 1708.0, 736.0, 1705.0, 734.0, 1700.0, 732.0, 1695.0, 731.0, 1683.0, 725.0, 1680.0, 724.0, 1672.0, 723.0, 1669.0, 722.0, 1666.0, 720.0, 1663.0, 719.0, 1659.0, 718.0, 1650.0, 717.0, 1646.0, 715.0, 1642.0, 714.0, 1636.0, 713.0, 1621.0, 711.0, 1606.0, 711.0], label=0, z_order=0)
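In the output above, points appears to be a flat list of alternating x and y coordinates. Under that assumption, the sketch below groups such a list into (x, y) pairs and computes the enclosed area with the shoelace formula, which can serve as a quick sanity check on a converted polygon. The helper names `to_xy_pairs` and `polygon_area` are hypothetical and not part of Datumaro.

```python
def to_xy_pairs(points):
    """Group a flat [x0, y0, x1, y1, ...] list into (x, y) tuples."""
    if len(points) % 2 != 0:
        raise ValueError("expected an even number of coordinates")
    return list(zip(points[0::2], points[1::2]))


def polygon_area(points):
    """Area enclosed by a flat point list, computed with the shoelace formula."""
    pairs = to_xy_pairs(points)
    twice_area = 0.0
    # Pair every vertex with its successor, wrapping around to the first one.
    for (x0, y0), (x1, y1) in zip(pairs, pairs[1:] + pairs[:1]):
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


# Made-up 10 x 5 rectangle, not taken from the dataset.
rectangle = [0.0, 0.0, 10.0, 0.0, 10.0, 5.0, 0.0, 5.0]
print(to_xy_pairs(rectangle)[:2])  # prints [(0.0, 0.0), (10.0, 0.0)]
print(polygon_area(rectangle))  # prints 50.0
```

A repeated closing point, as in the polygons printed above, adds a zero-length edge and does not change the computed area.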

[6]:

viz = dm.Visualizer(dataset, figsize=(8, 6), alpha=0.7)

fig = viz.vis_one_sample(item_id, subset)

fig.show()

Finally, we export this dataset to the COCO format as follows.

[8]:

dataset.export("d6-dice-inst-mask", format="coco_instances", save_media=True)
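To check the export, you can count the annotations per category in the resulting COCO JSON file. This is a minimal sketch that relies only on the standard COCO keys categories and annotations. The helper name `count_annotations_per_category` is hypothetical, and the file location d6-dice-inst-mask/annotations/instances_default.json is an assumption about the export layout, so adjust it to what you find on disk.

```python
import json
import os
from collections import Counter


def count_annotations_per_category(coco_json_path):
    """Return {category name: number of annotations} for a COCO-style JSON file."""
    with open(coco_json_path, "r", encoding="utf-8") as fp:
        coco = json.load(fp)
    id_to_name = {cat["id"]: cat["name"] for cat in coco.get("categories", [])}
    counts = Counter(
        id_to_name.get(ann["category_id"], "unknown")
        for ann in coco.get("annotations", [])
    )
    return dict(counts)


# Assumed path of the exported annotation file; skipped if it does not exist.
export_json = "d6-dice-inst-mask/annotations/instances_default.json"
if os.path.exists(export_json):
    print(count_annotations_per_category(export_json))
```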